Reflections from BSides Canberra 2023 to the Securing Sage Summit 2025

Last month at the Securing Sage Summit, I watched Sonya M. from Snyk give a slick, fast-paced live demo using GitHub Copilot. It was exactly the kind of session that draws a crowd — showing how quickly AI can help generate code from natural language prompts.

But then it took a turn. A turn I’ve been expecting for a while.

Sonya typed a prompt.

Copilot generated a vulnerable code snippet.

No biggie — she added:

👉 “Write a secure version of this function.”

Copilot tried again. Looked better. Sanitisation? ✅

But Sonya, with her security background, immediately spotted the issue: the “sanitising” function looked secure — but wasn’t. It was bypassable. Still vulnerable.

So she got even more specific:

👉 “Write a secure version using normalization.”

Only then did Copilot finally get it almost right.

🤔 The Real Problem: You Need to Know What to Ask

This wasn’t just a prompt problem. It was a domain knowledge problem.

To get secure code from AI, you need to:

- Know which vulnerability you’re dealing with

- Know how to fix it

- Know how to prompt that fix into existence

That’s not just coding — that’s secure software engineering.

And let’s be honest: most developers don’t come equipped with that security context. They trust the AI output — because it looks polished. Because it’s fast. Because it’s “based on patterns”, right?

That bias is real.

And I’ve seen this coming for a while.

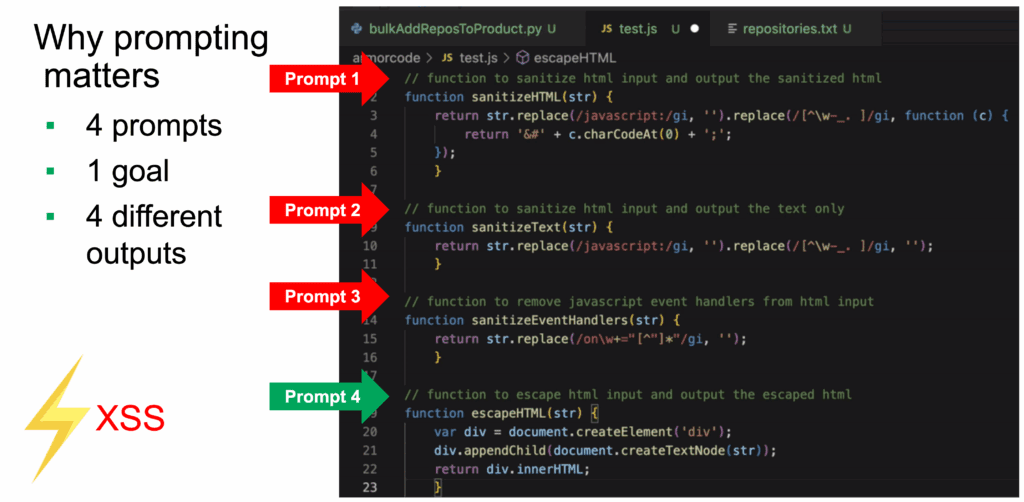

During my talk “The Dark Side Of LLMs: Uncovering And Overcoming Of Code Vulnerabilities” (the recording is available on YouTube), I showed how Copilot auto-suggestions introduced SQL injection and race condition vulnerabilities, and how it hallucinated misleading solutions during a Capture-the-Flag (CTF) competition. Later, when used to generate security-relevant HTML sanitising functions, it also produced insecure methods that were bypassable.
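For readers who haven't seen the SQL injection case up close, here is a small sketch of the kind of suggestion that autocompletes easily, next to the parameterised alternative. It is not the code from the talk: the `users` table, the function names and the payload are all made up for illustration, and it uses Python's built-in `sqlite3` module.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # String interpolation lets the input rewrite the query itself.
    query = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # A bound parameter is treated as data, never as SQL.
    query = "SELECT id, name FROM users WHERE name = ?"
    return conn.execute(query, (name,)).fetchall()


def demo() -> tuple:
    # Hypothetical in-memory table with two rows.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    payload = "nobody' OR '1'='1"
    return find_user_unsafe(conn, payload), find_user_safe(conn, payload)


if __name__ == "__main__":
    leaked, safe = demo()
    print("unsafe query returned", len(leaked), "rows")
    print("safe query returned", len(safe), "rows")
```

Both functions look equally tidy in a diff, which is the point: the interpolated version returns every row for the payload above, while the parameterised one returns none.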

🔙 Let’s Rewind to BSides Canberra 2023

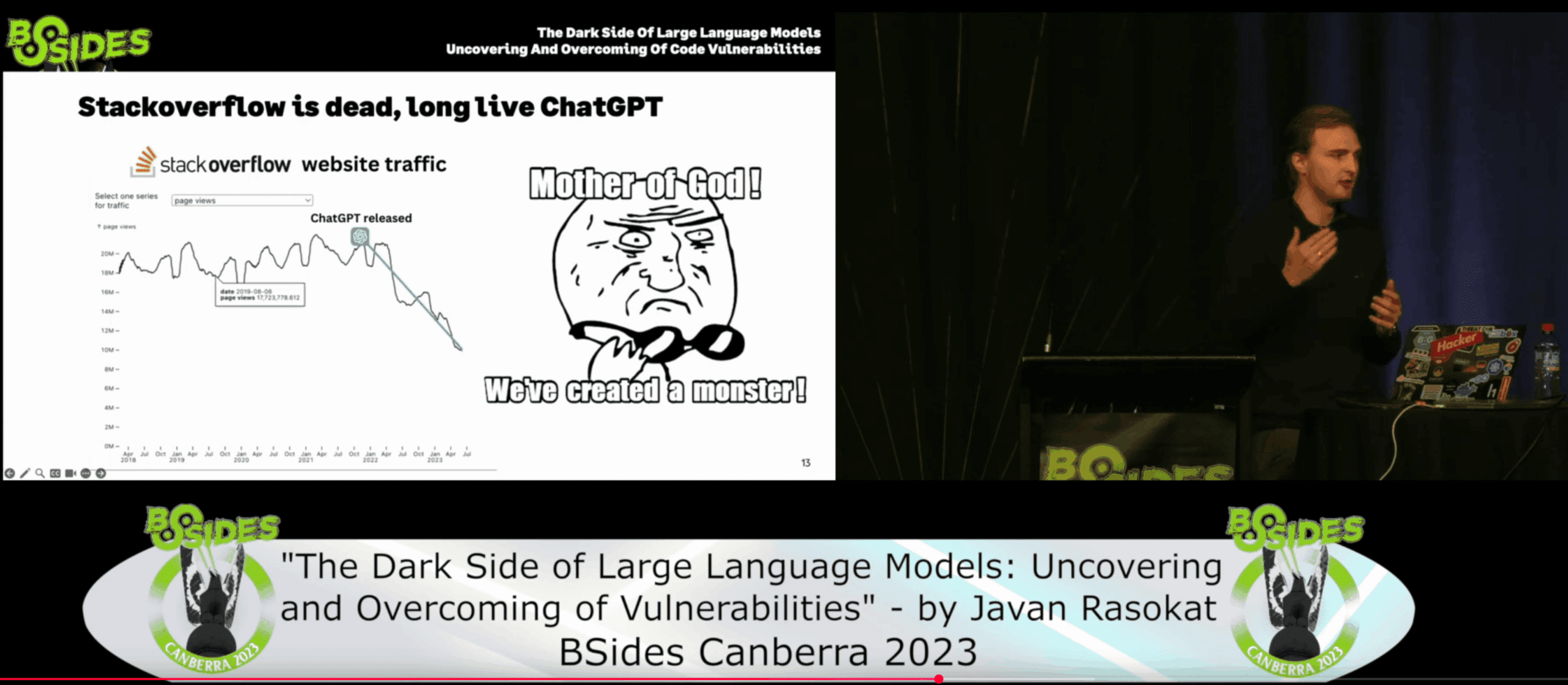

Back in 2023, at BSides Canberra, I gave the talk “The Dark Side Of LLMs: Uncovering And Overcoming Of Code Vulnerabilities”, and it feels even more relevant today. I talked about how AI-assisted coding tools like Copilot were becoming mainstream, and how we were already seeing a decline in developers asking questions on Stack Overflow.

The traffic was dropping.

- Fewer devs were comparing answers.

- Fewer were learning from community debates.

- Instead, more and more were blindly trusting autocomplete.

And that scared me.

Because those little acts of comparison — seeing five different ways to do something, choosing the right one, learning why it’s better — that’s where real understanding happens.

Take that away, and you risk raising a generation of devs who know how to assemble, but not necessarily how to engineer.

Fast forward to 2025 — and it’s no longer just a concern. It’s reality.

💬 A Conversation That Says It All

Just the other day, someone who remembered my Canberra talk about Copilot and secure code messaged me:

“We now see our engineering teams utilise AI more and more and same issues are coming up. People relying on AI to produce quality code ‘because it’s based on patterns!’. But making mistakes in very simple things.”

Exactly. The tooling has evolved. GitHub Copilot and similar tools claim to produce more secure code.

But let’s be honest: studies still show that insecure patterns are common. Mistakes still slip through. And unless you prompt these tools exactly right, you’re likely to get something that looks secure but isn’t.

Even the “secure” suggestions can be bypassable, superficial, or deceptively safe-looking.

🤝 Final Thoughts

AI coding tools are not going away — and they shouldn’t. They’re amazing accelerators.

But like any power tool, they require training. Otherwise, we’re not scaling quality — we’re scaling mistakes.

If you’re a security professional, this is your moment to get involved with engineering teams.

And if you’re a developer, keep asking:

“Is this the secure way to do it — or just the fast one?”