This week, the students in my Security Hackathon class at DHBW presented their final projects. The format was simple: each group selected one of the OWASP Top 10 Proactive Controls and explored it in depth.

The results were more than I had hoped for.

Although building a working project wasn’t required, many groups chose to go beyond expectations, turning security theory into practical, demonstrable minimum viable products (MVPs).

From Theory to MVPs: What the Students Delivered

Out of 12 groups:

- 7 created a working MVP, not just slides or code snippets.

- 5 of those 7 used AI-assisted coding tools, like GitHub Copilot or Cursor.

- Only one group coded entirely in plain PHP, with no AI help at all.

The projects were creative and diverse:

- A To-Do list app that showcased secure input handling.

- An Uno card game high score tracker, built to demonstrate SSRF attacks and their prevention.

- A canteen card app, to show CSRF attacks and their prevention.

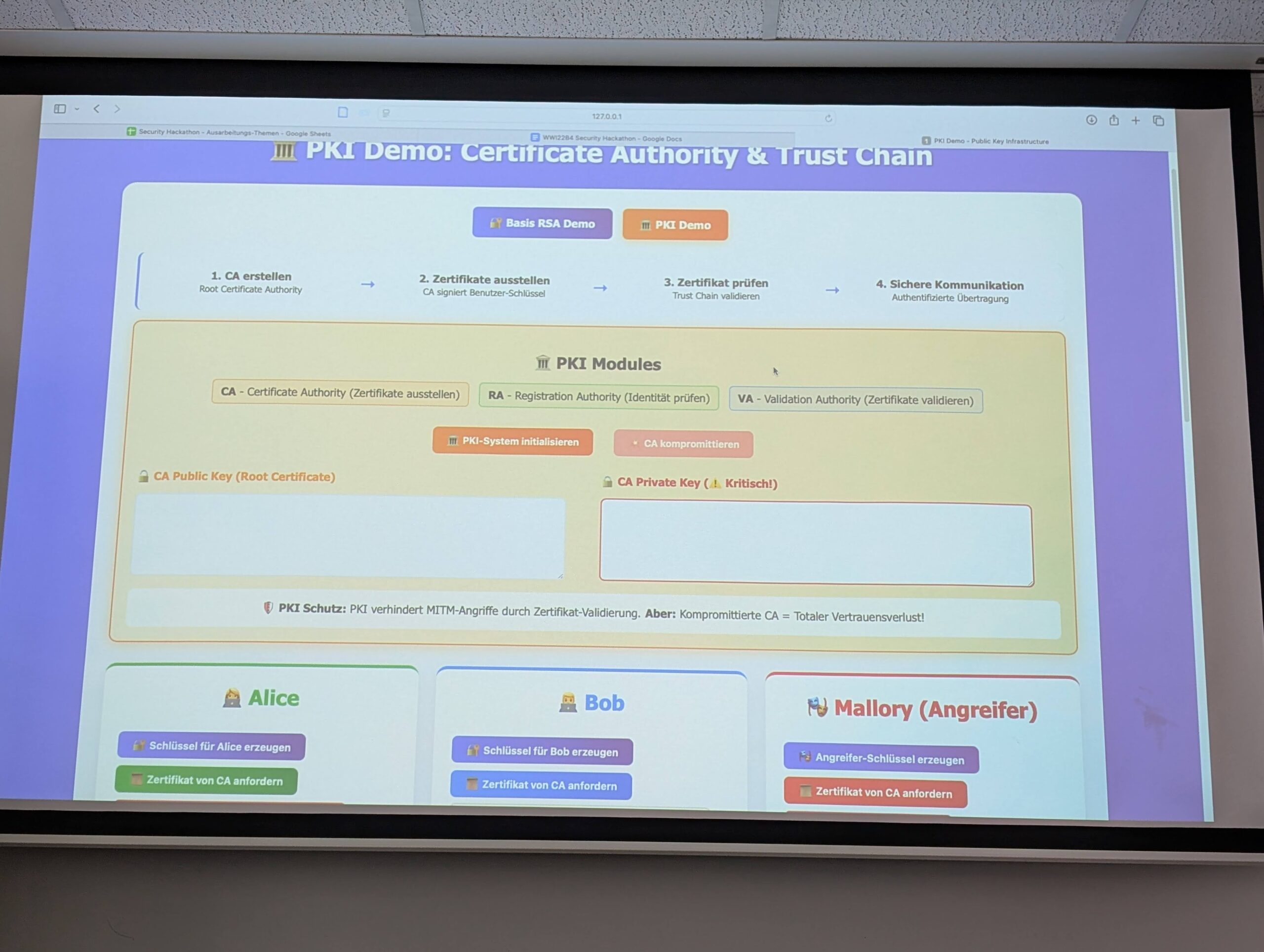

- A PKI crypto simulator demonstrating crypto concepts.

Each of these groups selected a security topic, built an application to highlight it, and presented both the technical implementation and the proactive control it addressed.
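For readers less familiar with the attacks behind these projects, here is a minimal sketch of one common CSRF defense, the synchronizer token pattern. It is not the canteen card group’s code. The function names and the in-memory session store are hypothetical, and a real application would normally rely on its framework’s built-in CSRF protection. The idea: the server embeds an unpredictable per-session token in each form and rejects state-changing requests that don’t echo it back. A malicious page can make the victim’s browser send cookies, but it cannot read the token.

```python
import hmac
import secrets
from typing import Dict, Optional

# Hypothetical in-memory store mapping a session ID to its CSRF token.
# A real app would keep the token in its server-side session instead.
_session_tokens: Dict[str, str] = {}


def issue_csrf_token(session_id: str) -> str:
    """Generate an unpredictable per-session token to embed in each form."""
    token = secrets.token_urlsafe(32)
    _session_tokens[session_id] = token
    return token


def is_valid_csrf_request(session_id: str, submitted_token: Optional[str]) -> bool:
    """Accept a state-changing request only if it echoes the session's token."""
    expected = _session_tokens.get(session_id)
    if expected is None or submitted_token is None:
        return False
    # Constant-time comparison to avoid leaking token contents via response timing.
    return hmac.compare_digest(expected.encode(), submitted_token.encode())


if __name__ == "__main__":
    token = issue_csrf_token("session-abc")
    print(is_valid_csrf_request("session-abc", token))     # True: legitimate form post
    print(is_valid_csrf_request("session-abc", "forged"))  # False: attacker cannot know the token
```

This is exactly the kind of logic where an AI assistant’s suggestion needs checking: a plain `==` comparison or a token derived from something guessable would still “work” in a demo while weakening the protection.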

What About AI Tools Like Copilot and Cursor?

Some might be alarmed by the heavy use of AI tools. I’m not.

In fact, what I saw gave me hope. The students weren’t blindly copy-pasting code from Copilot or trusting every suggestion. On the contrary, they shared stories about how:

- Copilot often misunderstood the security requirements.

- Cursor had to be prompted multiple times to deliver the desired logic.

- The tools made assumptions that needed to be corrected manually.

In other words: the students had to guide the AI, verify its output, and understand the domain well enough to know when the generated code was wrong.

That’s a skill.

Education Needs to Catch Up

There’s no going back. Tools like Copilot, Cursor, and ChatGPT are already integrated into how the next generation writes software. That means our job as educators is not to block these tools, but to:

- Teach students how to use them critically.

- Promote a deep understanding of the domain, so they can identify hallucinations and insecure patterns.

- Discuss the biases and limitations of machine-generated content.

Security education is no longer just about writing secure code. It’s about understanding how code gets written today and preparing students for that reality.

Creativity Through Acceleration

One thing AI tools did provide was acceleration. Students were able to prototype faster, make their UIs look better, and test ideas that would otherwise have been too time-consuming. That creative boost is valuable, especially in education, where time is limited and motivation matters.

But the key is this: you need the knowledge first. Without it, Copilot becomes a crutch; with it, Copilot becomes a powerful assistant.

Final Thoughts

The takeaway from this semester is clear: AI-assisted coding is here to stay, and that’s not a bad thing if we equip students with the skills to use it responsibly.

Security education must evolve to:

- Embrace AI tools,

- Encourage verification,

- And foster creativity grounded in real technical understanding.

And maybe that’s the real proactive control we need to teach: how to control the tools, rather than be controlled by them.