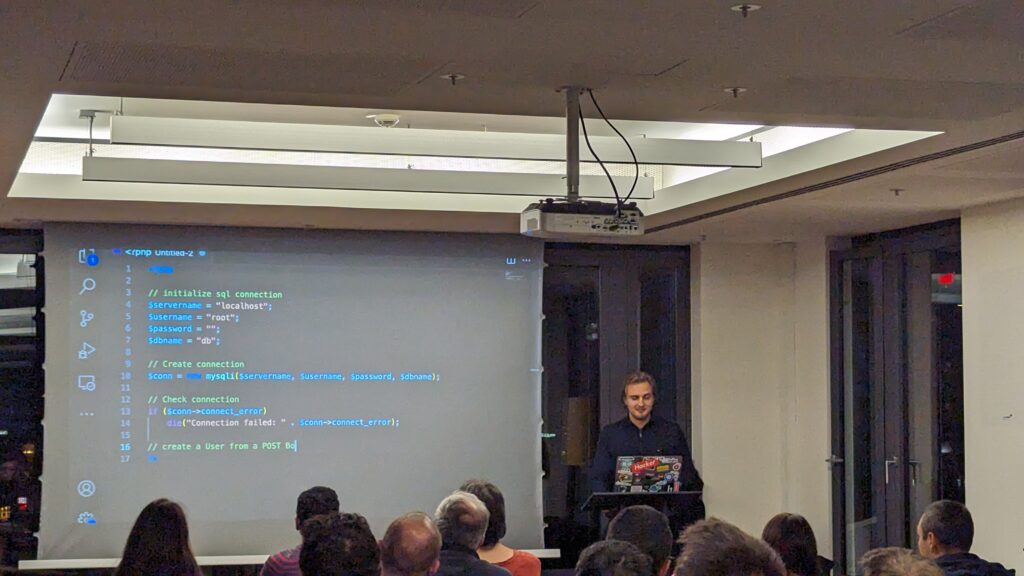

I had a great time last week at OWASP Frankfurt’s 63rd meetup all about #GenerativeAI and #Security!

We dived into deepfake detection and ways to bypass it – truly eye-opening.

We also explored the impact of AI-generated code on software security with a GitHub Copilot case study.

Plus, plenty of pizza and some fantastic home-brewed beer by Check24.

If you’re in the Frankfurt area, definitely consider joining the OWASP Group on Meetup. It’s a fantastic way to meet and learn from like-minded individuals. Kudos to Dan Gora, Jonas Becker, and Jasmin Mair for their excellent work in organizing these events. The next one is already planned for February 2024, so don’t forget to join the Meetup group to stay notified!

Meetup: https://www.meetup.com/de-DE/owasp-frankfurt/

OWASP Chapter page: https://owasp.org/www-chapter-frankfurt/

Talk 1 – The Dark Side Of Large Language Models: Uncovering And Overcoming Of Code Vulnerabilities

Javan Rasokat – Senior Security Specialist at Sage, Researcher and Lecturer at DHBW, Germany

In his talk, Javan will discuss the role of large language models in cybersecurity, highlighting both their potential and pitfalls. Using GitHub Copilot as a case study, he’ll emphasize the importance of secure coding practices and manual code reviews in AI-driven development.

Talk 2 – Seeing is Not Always Believing: The Rise, Detection, and Evasion of Deepfakes

Niklas Bunzel, PhD student at TU-Darmstadt and research scientist at Fraunhofer Institute for Secure Information Technology (SIT) and the National Research Centre for Applied Cybersecurity – ATHENE

Raphael Antonius Frick, research fellow at Fraunhofer Institute for Secure Information Technology (SIT) and the National Research Centre for Applied Cybersecurity – ATHENE

In an era where digital information dominates, the saying “seeing is believing” has been cast into doubt with the emergence of deepfakes: AI-generated media that manipulates reality. This talk delves into the sophisticated technologies behind the creation of these deceptive videos and images. We’ll explore cutting-edge methods for detecting such manipulations, ensuring the authenticity of digital content. However, as detection techniques advance, so too do evasion strategies, including adversarial attacks and prompt injection methods. This raises the question: Can we still believe everything that we see?