In today’s digital age, data has become a valuable asset for organizations, and it is collected, processed, and stored at an unprecedented rate. Much of this data contains sensitive personal information that should be kept private and that, if not handled with care, can cause severe consequences for individuals and organizations. As a result, privacy engineering has emerged as a crucial discipline that focuses on designing, developing, and implementing systems that protect personal information from unauthorized access, use, disclosure, or destruction. In this article, I discuss the importance of privacy engineering and its relationship with application security.

This Friday, I participated in Pioneer Friday, and I watched two enlightening videos on privacy engineering and its relation to application security. The first video, “A Taste of Privacy Threat Modeling” by Kim Wuyts, was presented at the Global AppSec Dublin event. The second video was a podcast hosted by Rob van der Veer that covered AI security and privacy guidelines. Both videos highlighted the importance of privacy in software development and the consequences of ignoring it.

After watching these videos, I realized that privacy should not be an afterthought in application security; instead, it should be integrated into the software development lifecycle. In product security, it is crucial to think beyond just being an “Application Security Specialist” and include privacy-minded thinking in threat modeling. The first video showcased some practical examples that help visualize privacy threats and show why privacy should be considered during product development. An interesting side note from the video is that users are more likely to accept a product when privacy features are built into it.

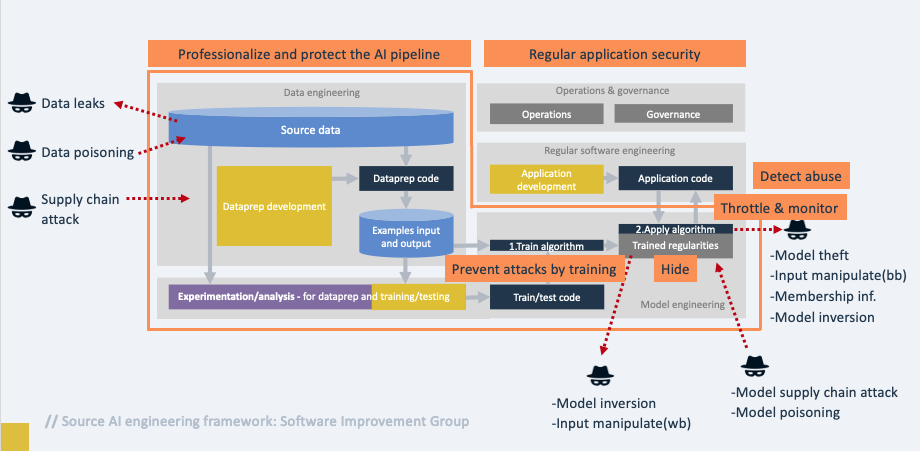

The videos also highlighted some real-life examples of privacy failures in software products. One example cited was the Bing AI search revealing classified data, which was described as the result of a model inversion attack. Another was a toy doll banned by German authorities because it violated basic privacy principles and was classified as an espionage device. Additionally, the second video discussed the threat of input manipulation attacks and gave good reasons why successful large language models (LLMs), such as ChatGPT, treat their training datasets as “secret”.

Privacy and security are related but different concepts. While security focuses on protecting company assets from external attacks, privacy focuses on protecting personal data from attackers and from internal “misbehaviour.” Privacy requires a different mindset, but it does not need to conflict with security. Privacy threat modeling can be performed with the LINDDUN approach, whose name stands for Linking, Identifying, Non-Repudiation, Detecting, Data disclosure, Unawareness, and Non-Compliance. The process is similar to traditional application threat modeling.
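
To make this a bit more concrete, here is a minimal sketch of how the seven LINDDUN categories could be turned into a review checklist for the elements of a data flow diagram. Everything in it is my own assumption for illustration: the `Element` class, the `handles_personal_data` flag, the example system, and the rule that every element touching personal data gets checked against all seven categories. It is not the official LINDDUN mapping and not something shown in the videos.

```python
from dataclasses import dataclass
from enum import Enum


class Linddun(Enum):
    """The seven LINDDUN threat categories as named in this article."""
    LINKING = "Linking"
    IDENTIFYING = "Identifying"
    NON_REPUDIATION = "Non-Repudiation"
    DETECTING = "Detecting"
    DATA_DISCLOSURE = "Data disclosure"
    UNAWARENESS = "Unawareness"
    NON_COMPLIANCE = "Non-Compliance"


@dataclass(frozen=True)
class Element:
    """One element of a data flow diagram (hypothetical, simplified)."""
    name: str
    kind: str  # e.g. "process", "data store", "external entity"
    handles_personal_data: bool


def checklist(elements):
    """Return (element name, category) pairs an analyst should review.

    Assumption: every element that touches personal data is checked
    against all seven categories. This is a simplification, not the
    mapping defined by LINDDUN itself.
    """
    return [
        (element.name, category)
        for element in elements
        if element.handles_personal_data
        for category in Linddun
    ]


# Hypothetical example system, invented for this sketch.
model = [
    Element("Signup form", "process", True),
    Element("User database", "data store", True),
    Element("Static asset CDN", "external entity", False),
]

for name, category in checklist(model):
    print(f"{name}: review for {category.value} threats")
```

The point of the sketch is only that privacy threat modeling can follow the same loop as security threat modeling: list the elements of the system, walk through the threat categories for each one, and record what needs a closer look.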

Furthermore, the ISO/IEC 5338 standard provides guidelines for applying good software engineering practices to AI activities, such as versioning, documentation, testing, and code quality. It is also beneficial to bring AI developers, data scientists, and AI-related applications and infrastructure into the scope of existing security programs, including risk analysis, training, requirements, static analysis, code review, and pentesting.

To summarize, privacy engineering should be an integral part of software development, and organizations should apply good software engineering practices to their AI activities. Privacy and security should be seen as complementary disciplines, and privacy-minded thinking should be integrated into threat modeling. By doing so, organizations can ensure that their software systems are secure, privacy-compliant, and user-friendly.

Further resources:

- https://owasp.org/www-project-ai-security-and-privacy-guide/ – The article image is taken from this guide.

- https://www.linddun.org/post/document-your-privacy-threat-modeling-with-owasp-threat-dragon – OWASP Threat Dragon supports LINDDUN.